Keras gives you enough flexibility to build a model the way you want, and of course each way has its specific use cases.

The Keras Flatten class is very important when you have to deal with multi-dimensional inputs such as image datasets. The Flatten function flattens multi-dimensional input tensors into a single dimension, so you can model your input layer, build your neural network model, and then pass the data into every single neuron of the model effectively.

You can understand this easily with the Fashion MNIST dataset. The images in this dataset are 28 * 28 pixels. Hence, if you print the first image in Python, you see a multi-dimensional array, which we can't feed directly into the input layer of our deep neural network. To tackle this problem, we can flatten the image data when feeding it into the network, turning the multi-dimensional tensor into a one-dimensional array. The flattened array has 784 elements (28 * 28), so we can create our input layer with 784 neurons, one for each element of the incoming data. We can do all of this with a single line of code, sort of.

I am trying to merge the output from two models and give it as input to a third model using the Keras sequential model. `x = Dense(500, activation='relu')(inputs1)` `y = Dense(500, activation='relu')(inputs2)` `final_model.add(Dense(100, activation='relu'))` `final_model.add(Dense(3, activation='softmax'))` Up to here, my understanding is that the outputs of the two models, x and y, are merged and given as input to the third model. `final_model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=)` But when I fit all of this, ...
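One possible way to wire up the merge described in the question is the Keras functional API, since a Sequential model is a single linear stack of layers. The sketch below is only an illustration under assumptions: the input sizes (20 and 30 features), the random data, and the `accuracy` metric are made up, and `concatenate` is one of several merge layers that could be used.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical feature sizes for the two inputs (not given in the question).
inputs1 = keras.Input(shape=(20,))
inputs2 = keras.Input(shape=(30,))

# Two branches, as in the question.
x = layers.Dense(500, activation='relu')(inputs1)
y = layers.Dense(500, activation='relu')(inputs2)

# Merge the two branch outputs along the feature axis: (None, 1000).
merged = layers.concatenate([x, y])

# The "third model": layers applied to the merged tensor.
z = layers.Dense(100, activation='relu')(merged)
outputs = layers.Dense(3, activation='softmax')(z)

final_model = keras.Model(inputs=[inputs1, inputs2], outputs=outputs)
final_model.compile(optimizer='rmsprop',
                    loss='categorical_crossentropy',
                    metrics=['accuracy'])  # metric choice is an assumption

# Random stand-in data, only to show how fit/predict are called.
a = np.random.rand(8, 20).astype('float32')
b = np.random.rand(8, 30).astype('float32')
labels = keras.utils.to_categorical(
    np.random.randint(0, 3, size=8), num_classes=3)

final_model.fit([a, b], labels, epochs=1, verbose=0)
pred = final_model.predict([a, b], verbose=0)
print(pred.shape)  # (8, 3)
```

Note that the model takes a list of two arrays, one per input, in the same order as `inputs=[inputs1, inputs2]`.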
After that, we reshape the tensor into flat form. So basically: create tensor -> create InputLayer -> reshape = Flatten. Flatten is a convenient function that does all of this automatically.
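The reshape step that Flatten performs can be sketched in plain NumPy. This is only an illustration of the shape arithmetic, not what Keras runs internally; the batch of five zero-filled 28 x 28 arrays is a stand-in for real image data.

```python
import numpy as np

# Stand-in for a batch of 5 images of 28 x 28 pixels.
images = np.zeros((5, 28, 28), dtype='float32')

# Keep the batch dimension, collapse everything else into one axis.
flat = images.reshape(images.shape[0], -1)

print(images.shape)  # (5, 28, 28)
print(flat.shape)    # (5, 784)
```

The batch axis is left alone; only the per-sample dimensions are merged, which is why 28 * 28 becomes 784.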

# Keras sequential model multi output: how to

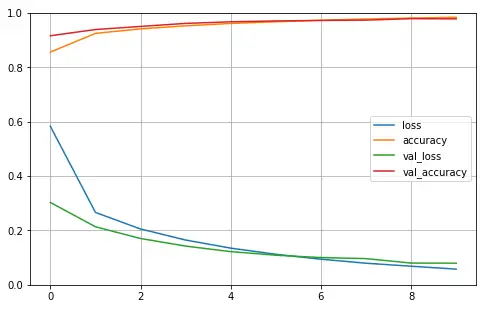

In this tutorial, we'll learn how to fit multi-output regression data with a Keras sequential model in Python. Multi-output regression data contains more than one output value for a given input. We can easily fit and predict this type of regression data with the Keras neural networks API.

In the second case, we first create a tensor (using a placeholder) and then create an Input layer.

The output of an LSTM is (batch size, units) with `return_sequences=False`, and (batch size, time steps, units) with `return_sequences=True`. Then you use a TimeDistributed layer wrapper.
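The two LSTM output shapes can be checked directly. The following is a minimal sketch with made-up sizes (batch of 4, 10 time steps, 8 features, 16 units); the `Dense(3)` inside `TimeDistributed` is likewise just an example of a layer applied at every time step.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Hypothetical sizes: batch of 4, 10 time steps, 8 features per step.
x = tf.random.uniform((4, 10, 8))

# Only the last time step is returned.
last_only = layers.LSTM(16, return_sequences=False)(x)

# One output vector per time step is returned.
all_steps = layers.LSTM(16, return_sequences=True)(x)

# TimeDistributed applies the wrapped layer to each time step separately.
per_step = layers.TimeDistributed(layers.Dense(3))(all_steps)

print(tuple(last_only.shape))  # (4, 16)
print(tuple(all_steps.shape))  # (4, 10, 16)
print(tuple(per_step.shape))   # (4, 10, 3)
```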

Now, if you want the output to have the same number of time steps as the input, you need to turn on `return_sequences=True` in all your LSTM layers.

`model.add(Flatten(input_shape=(28, 28, 3)))  # reshapes to (2352) = 28x28x3`

# Keras sequential model multi output: code
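For the multi-output regression case this tutorial introduces, a Sequential model can simply end in a Dense layer with one unit per output value. The sketch below is a hedged illustration: the synthetic data, the layer sizes, and the optimizer are all assumptions chosen to keep the example small.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Synthetic data (assumption): 3 input features, 2 output values per sample.
rng = np.random.default_rng(0)
X = rng.random((200, 3)).astype('float32')
Y = np.stack([X.sum(axis=1), X[:, 0] - X[:, 1]], axis=1)  # shape (200, 2)

model = keras.Sequential([
    keras.Input(shape=(3,)),
    layers.Dense(32, activation='relu'),
    layers.Dense(2),  # one unit per output value, linear activation
])
model.compile(optimizer='adam', loss='mse')
model.fit(X, Y, epochs=5, batch_size=16, verbose=0)

# Each prediction row holds both output values for one input row.
pred = model.predict(X[:4], verbose=0)
print(pred.shape)  # (4, 2)
```

A single loss over both columns treats the outputs equally; if the outputs have very different scales, scaling the targets first is a common choice.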

Here I would like to present another alternative to the Flatten function. This may help you understand what is going on internally. The alternative method adds three more lines of code. Note: I used the `model.summary()` method to provide the output shape and parameter details.
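To see that flattening is just a reshape, one can compare `Flatten` with an explicit `Reshape` layer. This is a sketch of the idea and not necessarily the three lines the author had in mind; the input of shape (2, 28, 28, 3) is made-up data.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

# Made-up batch of 2 inputs of shape (28, 28, 3).
x = tf.reshape(tf.range(2 * 28 * 28 * 3, dtype=tf.float32), (2, 28, 28, 3))

# Flatten infers the target size from the input.
flat_a = layers.Flatten()(x)

# Reshape needs the target shape spelled out (batch axis excluded).
flat_b = layers.Reshape((28 * 28 * 3,))(x)

print(tuple(flat_a.shape))  # (2, 2352)
print(tuple(flat_b.shape))  # (2, 2352)
print(np.array_equal(np.asarray(flat_a), np.asarray(flat_b)))  # True
```

The practical difference is convenience: `Flatten` works for any input shape, while `Reshape` has to be told the flattened size.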

The role of the Flatten layer in Keras is super simple: a flatten operation on a tensor reshapes the tensor to have a shape equal to the number of elements contained in the tensor, not including the batch dimension.

The output shape for the Flatten layer, as you can read, is (None, 48). In fact, None in that position means any batch size. You should read it as (1, 48) or (2, 48), and so on. For the inputs, recall that the first dimension is the batch size and the second is the number of input features.

I am trying to understand the role of the Flatten function in Keras. Below is my code, which is a simple two-layer network. It takes in 2-dimensional data of shape (3, 2), and outputs 1-dimensional data of shape (1, 4): `model = Sequential()` ... `model.compile(loss='mean_squared_error', optimizer='SGD')`

However, if I remove the Flatten line, then it prints out that y has shape (1, 3, 4). From my understanding of neural networks, the `model.add(Dense(16, input_shape=(3, 2)))` call creates a hidden fully-connected layer with 16 nodes. Each of these nodes is connected to each of the 3x2 input elements. Therefore, the 16 nodes at the output of this first layer are already "flat". So, the output shape of the first layer should be (1, 16). Then, the second layer takes this as input and outputs data of shape (1, 4). So if the output of the first layer is already "flat" and of shape (1, 16), why do I need to flatten it further?

Flattening a tensor means removing all of the dimensions except for one. This is exactly what the Flatten layer does. If we take the original model (with the Flatten layer) into consideration, we get the following model summary: Layer (type) Output Shape Param #

For this summary, the next image will hopefully make a little more sense of the input and output sizes for each layer.
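The shapes in the question can be traced with plain NumPy, using the fact that a Keras Dense layer acts on the last axis of its input only. The sketch below uses random stand-in weights, omits biases and activations, and is meant only to follow the shape arithmetic, not to reproduce the original model.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.random((1, 3, 2))  # one sample of shape (3, 2)

# Weight shapes a Dense(16) and a Dense(4) layer would have
# (random stand-ins, biases and activations omitted).
w1 = rng.random((2, 16))
w2_no_flatten = rng.random((16, 4))
w2_flatten = rng.random((3 * 16, 4))

# Dense(16) maps the last axis 2 -> 16, so the 3 rows are kept.
h = x @ w1                           # (1, 3, 16), not (1, 16)

# Without Flatten, Dense(4) again acts on the last axis only.
y_no_flatten = h @ w2_no_flatten     # (1, 3, 4)

# With Flatten, the 3 x 16 values are merged into 48 features first.
h_flat = h.reshape(h.shape[0], -1)   # (1, 48)
y_flatten = h_flat @ w2_flatten      # (1, 4)

print(h.shape, y_no_flatten.shape, h_flat.shape, y_flatten.shape)
# (1, 3, 16) (1, 3, 4) (1, 48) (1, 4)
```

So the first layer's output is not already flat: it is (1, 3, 16), which is where both the (1, 3, 4) result without Flatten and the 48 in the Flatten output shape come from.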
